Within reach

In the booming field of neuroprosthetics, new work shows that deploying brain-machine interfaces may be simpler, and the results more naturalistic, than expected. The brain is well equipped to adopt a robotic arm—or just about anything else you can plug into it.

Plugging a machine directly into a human brain—whether to allow a paralyzed patient to move a cursor and drive a wheelchair, to direct an artificial limb or to permit wireless brain-to-brain communication—is a Rubicon of human-machine intimacy. And we are crossing it.

Just a decade ago, when Jose Carmena went to his first Society for Neuroscience meeting, in Orlando, Florida, only a few engineers and neuroscientists had the audacity to imagine that a plug-and-play brain-machine interface (BMI)—a kind of USB port to the brain—would be possible in the foreseeable future. “There was just one small session on one afternoon in one small corridor with some posters,” says Carmena, now an associate professor in the Department of Electrical Engineering and Computer Sciences (EECS) and in the Helen Wills Neuroscience Institute at Berkeley.

In contrast, the same conference last year was all BMI all the time. “There was a session pretty much every day. Often there were overlapping sessions with posters and talks. It has been a huge transformation,” says Carmena.

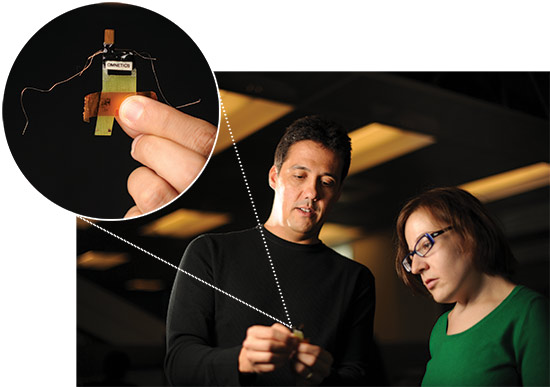

Jose Carmena, director of the Brain-Machine Interface Systems Lab and co-director of the Berkeley-UCSF Center for Neural Engineering and Prostheses (CNEP), holds a neural interface (inset) while Amy Orsborn, a bioengineering graduate student in Carmena’s lab, looks on. The neural interface is surgically implanted on the motor cortex, the part of the brain that controls movement.

Advances in materials science, machine learning, signal processing, robotics, neurosurgery, neuroscience and micro-electronics are all coming together to make BMI a reality, says Carmena, director of the Brain-Machine Interface Systems Lab and co-director of the Berkeley-UCSF Center for Neural Engineering and Prostheses (CNEP).

Earlier this year, a group at Brown University and Massachusetts General Hospital succeeded in helping a quadriplegic patient guide a robotic arm with a BMI to pick up a water bottle and bring it to her mouth. At UCSF, neurosurgeon and CNEP co-director Edward Chang and his colleagues are currently developing a speech prosthetic that Chang believes will soon be able to read words from a paralyzed patient’s brain and convert them into a synthesized voice.

Despite such progress, one benchmark of success remains frustratingly defiant: the easy and natural operation of a fully articulated prosthetic arm that can be controlled directly by its user’s intentions. Celebrated robotic arms, such as the super-high-tech DARPA arm, are terrific in theory, but amputees who try them regularly revert to the simpler, lighter, less expensive and much easier-to-use hooks from half a century ago. Prostheses that rely on foot controls or other outside-the-brain manipulations require a level of concentration disproportionate to the control they deliver. Similarly, the robotic arm and its BMI unveiled at Brown earlier this year require intense focus to conduct even the simplest tasks. You don’t want to have to try with all your might just to raise a cup to your lips.

“The field now needs to transform BMI systems from one-of-a-kind prototypes into clinically proven technology like pacemakers and cochlear implants,” Carmena wrote in the March 2012 issue of IEEE Spectrum. “We want a device that is essentially plug-and-play.”

At Carmena’s BMI Systems Lab and at CNEP, a new model is emerging that may make that vision a reality. This January, Carmena and colleagues published a Nature paper demonstrating that with appropriate feedback, rats can weave a BMI device seamlessly into their own neuronal environments so that using it becomes second nature. Not only do parts of the motor cortex quickly adapt so that they can direct a cursor, but other brain structures, such as the dorsolateral striatum (associated with automatized skill learning, habit formation and motor skill learning), are also engaged.

That these BMI-proficient animals have turned-on dorsolateral striata suggests that use of their new brain add-ons has become “internalized” and automatic, says Amy Orsborn, a graduate student in Carmena’s lab.

If the BMI Systems Lab can make a prosthetic arm’s use second nature, and if Carmena can design it so that it sends sensory feedback from the hand back to the brain, the lab will have achieved a kind of gold standard for BMI.

Carmena believes that achievement will not stem simply from developing more complicated algorithms or neuronal decoders. Rather, it will come from letting the brain take the lead in learning how to incorporate a new device naturally and then augmenting that with artificial decoders that can be adapted online.

His approach plugs the BMI into the brain and lets the brain learn to use it by providing the feedback that is key to all adaptive neural activity. When certain neurons fire, the prosthesis goes up. The subject’s brain, given clear feedback, senses that and quickly learns to fire those neurons on command. The brain will try new combinations; if they work, they will get stronger, and if they don’t, they will weaken.
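The trial-and-feedback loop described above can be pictured with a toy simulation. This is only an illustrative sketch, not the lab's model: the function `learn_to_hit_target`, the fixed linear decoder, and all parameter values are assumptions invented for the example. A simulated "brain" tries small random changes to its firing rates and keeps only the changes that move the cursor closer to the target.

```python
import random

def learn_to_hit_target(decoder_weights, target, trials=2000, step=0.1, seed=0):
    """Toy model (hypothetical): keep firing patterns that feedback rewards."""
    rng = random.Random(seed)
    rates = [0.0] * len(decoder_weights)  # firing rates the "brain" controls

    def cursor(r):
        # Fixed decoder: cursor position is a weighted sum of firing rates.
        return sum(w * x for w, x in zip(decoder_weights, r))

    error = abs(cursor(rates) - target)
    for _ in range(trials):
        # Try a new combination of firing rates (rates cannot go negative).
        trial = [max(0.0, x + rng.uniform(-step, step)) for x in rates]
        trial_error = abs(cursor(trial) - target)
        if trial_error < error:
            # Feedback says the cursor got closer: keep ("strengthen") it.
            rates, error = trial, trial_error
        # Otherwise the attempt is simply dropped ("weakened").
    return rates, error

rates, error = learn_to_hit_target([0.5, -0.2, 0.8], target=1.0)
print(f"remaining error after practice: {error:.4f}")
```

The decoder never changes here; all of the improvement comes from the simulated brain adjusting its own activity, which is the point the passage makes.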

Like learning to play tennis or ride a bike, the process is gradual and requires no step-by-step planning. “It’s like it has become an extended part of the user,” Carmena says.

“Instead of us trying to decode the neurons,” says Orsborn, “we let the neurons themselves figure out what they need to do to produce skilled BMI controls. And over 10 days or so, they learn to play BMI.” Rats described in the Nature paper learned to direct a “sound cursor” that creates specific tones that, once achieved, would release specific rewards. Primates in the BMI lab have since learned to do the same thing with both a computer cursor and a robotic arm.

This insight is a windfall for BMI researchers, says UCSF’s Chang. If they can get reliable, high-quality information about the world to the brain, then the brain does the computational heavy lifting.

Since the Nature paper, Carmena’s lab has also been developing ways to amplify that natural process with algorithms that adjust the decoder to the brain’s own adaptations to the new data. The idea is to get the brain and the BMI’s decoder each adapting to and accommodating the other toward the same aim, the automatic coordinated motion of the prosthetic arm.

“Synergizing these two adaptive mechanisms is key,” says Carmena. “In this approach, neuroplasticity takes care of incorporating the prosthetic into the brain’s real estate. Simultaneously adapting the decoder lets the user learn faster and boost performance.”
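The decoder half of that two-way adaptation can be sketched in a few lines. The article does not say which algorithm the lab uses, so this example swaps in a plain least-mean-squares update as a stand-in; the function `adapt_decoder`, the sample data, and the learning rate are all hypothetical. Each step nudges the decoder's weights so that its output moves closer to what the user intended.

```python
def adapt_decoder(weights, rates, intended, learning_rate=0.05):
    """One least-mean-squares step (an assumed stand-in, not the lab's method)."""
    predicted = sum(w * r for w, r in zip(weights, rates))
    error = intended - predicted
    # Shift each weight in proportion to the error and that neuron's firing rate.
    return [w + learning_rate * error * r for w, r in zip(weights, rates)]

# Hypothetical recordings: (firing rates of two neurons, intended cursor velocity).
samples = [([1.0, 0.0], 0.5), ([0.0, 1.0], -0.3), ([1.0, 1.0], 0.2)]

weights = [0.0, 0.0]
for _ in range(500):
    for rates, intended in samples:
        weights = adapt_decoder(weights, rates, intended)

print([round(w, 3) for w in weights])
```

In a real system the firing rates would themselves shift as the brain learns, so the decoder would be chasing a moving target; the sketch holds them fixed to keep the update rule easy to follow.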

Carmena and his team are applying these principles to a new collaboration, funded late last year by a $2 million National Science Foundation grant. Working with EECS professor Claire Tomlin, mechanical engineering professor Masayoshi Tomizuka and what Carmena calls “an army of excellent students and post-docs,” they are developing an interface control for an exoskeletal arm that may someday allow paralyzed patients to “perform tasks of daily living.” Users would wear the device over their immovable arm and would manipulate it, via BMI, with their minds alone.

“Making a good enough BMI to naturally drive a prosthetic arm has exciting problems in pretty much every discipline from every part of campus,” says Carmena. First, there’s the basic neuroscience. You have to adequately understand what’s going on in the brain itself. Psychology and neuroscience professor Robert Knight is a giant in that field and a key player at CNEP. Chang, one of Knight’s protégés, is another. And Carmena himself is focused on understanding the basic science around neuroplasticity, or the brain’s ability to change and adapt.

Then there are the material and engineering requirements of the electrodes implanted in the brain. These have to remain immobilized so they continue to read from the same cohort of neurons. They must be dense and sensitive enough to read brain activity at a high resolution, and they must not damage the brain itself even though they are placed on or into it. Finally, since they require neurosurgery to install or repair, they need to do all of these things for a lifetime.

Michel Maharbiz, assistant professor of EECS, is developing miniature grids of implantable electrodes that record at very high resolution. The challenge is in the “plumbing,” as he calls it, of getting electrodes and neurons to interface seamlessly. Pushing the boundaries of such meshing in one recent experiment, Maharbiz inserted a porous electrode into a pupating larval beetle’s eye tissue, which grew around and through it so that the electrode “was like a part of the eye itself,” he says.

Then there are the microcircuits that do preliminary processing of the signals coming from the electrodes. These need to be small enough (in a wireless version) to be positioned beneath the skull without displacing brain tissue, to use very little power and to be rechargeable through the skull.

Maharbiz works closely with both Carmena and EECS professor Jan Rabaey, who is developing a wireless chip that can transmit information directly from each of Maharbiz’s electrodes or from a network of them. Going wireless means patients will be less prone to infection and won’t have to contend with wires coming out of their heads.

Then there is the decoder itself, a program that interprets the brain signals and sends commands to (and eventually from) the prosthetic. The decoder and the algorithms that define it are Carmena’s bailiwick, along with the basic neuroscience. And finally, there is the prosthetic itself, in this case a robotic or exoskeletal arm.

Today, most prostheses rely only on visual feedback. But when a person learns to do something like wash her face, she uses tactile senses and a proprioception system that connects to receptors on muscles and joints, which tell her where her hands are in space and what they are doing. Carmena is learning to simulate tactile sensation and proprioception with something called intracortical electrical microstimulation. He is sending signals back from the prosthetic hand and arm to appropriate parts of the brain. His former grad student Subramaniam Venkatraman conducted experiments that delivered a tiny reinforcing stimulus to a rat subject’s cortex every time the rat hit a target with its whisker, which the rat could not sense. The rat quickly learned to precisely hit targets with that whisker in exchange for a drop of fruit juice. In effect, it learned to track the whereabouts of the end of a whisker it could not feel.

Carmena is wary of raising expectations too high too soon. At a recent Christopher Reeve Foundation conference where he gave a talk about his BMI work, paralyzed patients in the audience were looking at him, he says, “with eyes that said ‘OK, when is this going to be ready?’” But help for patients suffering from spinal cord injury, amyotrophic lateral sclerosis or stroke, for example, may still be a decade or more away.

On the other hand, so many of the bottlenecks are being addressed at the same time, in parallel, and by the best people in the business. “It could,” Carmena says, “move along very fast.”

Integrating human brains, computers and the machines those computers control has begun in the clinical realm, but few on the inside expect it to end there. If a plug-and-play BMI is routinized and commercialized, all kinds of non-medical applications may follow. Surely there will be challenging legal and ethical implications as well.

We may be crossing a Rubicon of human-machine intimacy, but what happens on the far side of the river is up for grabs.