Moments of untruth

Humans have been manipulating photos for as long as we’ve had cameras. But political and social forces have been using images to manipulate us for nearly as long.

By 1865, photojournalist Mathew Brady had already mastered the art of photo manipulation. He demonstrated it in a studio portrait of eight Civil War generals, including one who wasn't actually there. Later, a 1920 photograph of Vladimir Lenin addressing troops in Moscow was famously modified by Soviet censors to remove state opponent Leon Trotsky. Over the decades, we've seen a slew of altered images created by intelligence agencies and governments around the world, usually for dubious purposes.

But in recent years, something has changed. Now, virtually anyone can manipulate a photo well enough to go undetected, thanks to sophisticated, affordable and user-friendly editing tools. And, increasingly, they can do the same with video. With the broad reach of the internet and social media, this modified and misleading content can spread more quickly and have far greater impact than it did in the past.

The potential harm from this technology, to individuals, to democratic elections and even to the idea of truth itself, means that the stakes are higher than ever. But Berkeley researchers are taking a leading role in developing powerful techniques to analyze and authenticate visual media. These tools will help us better distinguish the fake from the real.

Detecting deepfakes

Many of us have watched as fake and modified videos have paraded across our social media feeds: actor Jordan Peele ventriloquizing President Barack Obama; House Speaker Nancy Pelosi slurring her speech; Facebook CEO Mark Zuckerberg boasting about using stolen data.

Like fake photos, digitally manipulated videos can be compelling enough to gain traction online. Yet modified videos are still relatively easy to spot — particularly those featuring high-profile people made to do or say something new.

But for how much longer? Videos synthesized through artificial intelligence (AI), commonly known as deepfakes, are improving in realism. There will likely come a time — perhaps not so far from now — when the naked eye can no longer tell an original video from a modified one.

That's where Hany Farid comes in. He is a professor in electrical engineering and computer sciences and in the School of Information. He's a pioneer and world expert in digital forensics: determining whether photos, and increasingly videos, are real or fake. Over the past 21 years, he has developed mathematical and computational algorithms to detect tampering in digital media.

His job is getting harder all the time. Rapid advances in machine learning have made it easier than ever for average people to create seamless, believable fake photos and videos. “We know that this technology to create fake content and distribute it is developing very, very quickly. There’s no question about that,” Farid says. “That means that the threat is increasing.”

He’s trying to stay at least one step ahead. The latest method out of his lab, for example, uses sophisticated modeling to detect deepfakes of U.S. politicians, including Donald Trump, Joe Biden, Cory Booker, Kamala Harris, Bernie Sanders and Elizabeth Warren.

By feeding a model with hours of authentic video footage, Farid and Ph.D. student Shruti Agarwal trained it to recognize each individual based on a frame-by-frame analysis of 18 facial movement and two head movement parameters, including how and when they raise and lower their eyebrows, wrinkle their nose and tighten their lips. Because these facial movements represent “very particular glitches that cannot be replicated easily by AI tools,” Agarwal says, the model uses them to verify if the individual depicted in a given video clip is the real thing or some sort of impostor.
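The researchers have not released their model. The following is therefore only a rough, hypothetical illustration of the general idea, not their method. It assumes each video frame has already been reduced to a list of movement measurements, such as the facial and head parameters described above. It then summarizes a clip by how strongly each pair of measurements rises and falls together, using Pearson correlation. Finally, it flags a clip whose summary sits too far from the average summary of authentic footage. The pairwise-correlation summary, the function names, the toy three-parameter data and the 0.5 distance threshold are all assumptions made for this sketch.

```python
import math
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)

def clip_signature(frames):
    """Summarize a clip as the correlation between every pair of movement parameters.

    frames: one list of measurements per frame, all the same length
    (e.g. 20 values: 18 facial movements plus 2 head movements).
    """
    columns = list(zip(*frames))  # one time series per parameter
    return [pearson(a, b) for a, b in combinations(columns, 2)]

def build_profile(authentic_clips):
    """Average the signatures of clips known to show the real person."""
    sigs = [clip_signature(clip) for clip in authentic_clips]
    return [sum(vals) / len(vals) for vals in zip(*sigs)]

def looks_authentic(clip, profile, threshold=0.5):
    """Hypothetical decision rule: Euclidean distance to the profile below a threshold."""
    sig = clip_signature(clip)
    dist = math.sqrt(sum((s - p) ** 2 for s, p in zip(sig, profile)))
    return dist < threshold

def toy_clip(same_sign, n=30, phase=0.0):
    """Hypothetical 3-parameter clip. Parameter 2 always mirrors parameter 0.
    Parameter 1 tracks parameter 0 (the 'real' person's habit) or mirrors it (an impostor)."""
    frames = []
    for t in range(n):
        a = math.sin(t / 3 + phase)
        frames.append([a, a if same_sign else -a, -a])
    return frames

profile = build_profile([toy_clip(True), toy_clip(True, n=40, phase=1.0)])
print(looks_authentic(toy_clip(True, n=25, phase=2.0), profile))  # True
print(looks_authentic(toy_clip(False), profile))                  # False
```

The point of the design is that an impostor can copy a face's appearance and still move it in a slightly different pattern. A per-person signature of co-occurring movements captures that pattern, even when no single frame looks wrong. A real system would use far more footage and richer features, and it would use a trained classifier rather than a fixed distance threshold.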

“We saw the infamous Jordan Peele impersonation of Obama [in April 2018], and that video was really good quality at that time,” she says. “We realized that with the development of new AI tools, this technique was going to improve, and it would be very difficult for an average person, or even an expert, to tell whether a video is a fake or a real one. That can be used for lots of nefarious purposes.”

The detection technique isn't foolproof, Agarwal notes, but it should stop most people from creating a passable deepfake, at least for now. Other approaches to detecting digital fakery search for artifacts or signatures left behind by AI tools, she adds. One example is blurring in areas where manipulation has taken place, particularly around the face.
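A common, simple way to quantify blurring is the variance of the Laplacian. Sharp detail produces large second-derivative responses, while a smoothed patch produces small ones. The sketch below is an illustrative assumption, not one of the detectors the researchers describe. It compares that sharpness measure inside a face region with a reference region elsewhere in the same grayscale frame. The function names, the (top, left, height, width) box convention and the reading of the ratio are hypothetical.

```python
def laplacian_variance(img, top, left, height, width):
    """Variance of a 4-neighbour Laplacian over a rectangular region (higher = sharper).

    img: 2-D list of grayscale values. The region must not touch the image border,
    because each pixel's four neighbours are read.
    """
    vals = []
    for r in range(top, top + height):
        for col in range(left, left + width):
            lap = (img[r - 1][col] + img[r + 1][col]
                   + img[r][col - 1] + img[r][col + 1] - 4 * img[r][col])
            vals.append(lap)
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)

def blur_ratio(img, face_box, ref_box):
    """Sharpness of the face region relative to a reference region (hypothetical check).

    Values well below 1.0 mean the face is much smoother than its surroundings.
    """
    face = laplacian_variance(img, *face_box)
    ref = laplacian_variance(img, *ref_box)
    return face / ref if ref > 0 else float("inf")

# Toy frame: a 12x12 checkerboard of "fine texture".
size = 12
img = [[100.0 * ((r + c) % 2) for c in range(size)] for r in range(size)]
print(blur_ratio(img, (2, 2, 3, 3), (7, 7, 3, 3)))  # 1.0 (equally sharp)

# Simulate a smoothed-over "face" by flattening a block to a uniform gray.
for r in range(1, 6):
    for c in range(1, 6):
        img[r][c] = 50.0
print(blur_ratio(img, (2, 2, 3, 3), (7, 7, 3, 3)))  # 0.0 (face far smoother)
```

On its own, a low ratio is weak evidence, since real photos also vary in sharpness because of focus and depth of field. Cues like this are typically combined with others rather than used as a verdict.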

Farid has worked on many of these. But to keep his edge, he holds some of his tools in reserve, in a personal digital forensics arsenal. He can deploy it when news organizations, social media companies or others call on him to analyze a particularly high-quality fake.

When publishing Agarwal’s model, the pair decided not to release the actual data or code. “We don’t want this thing getting operationalized against us,” Farid says. “We will share it with very specific forensic researchers, but not everybody.”

Detecting fakery is a task he takes seriously. But he also believes that in the long run, technology will never solve the bigger problem of misinformation. Preserving our faith in legitimate news organizations, democratic elections and the concept of truth itself will take policy, regulation and education, too. “Once we enter a world where everything can be faked, then nothing’s real,” he says, “and everything has a sense of plausible deniability.”

A machine learning classifier detects images that use Face-Aware Liquify, a photo editing tool. (Photos courtesy the researchers)

“A competitive collaboration”

Farid, of course, is not the only one fighting this battle. He's joined by Alexei Efros, professor of electrical engineering and computer sciences. Efros's lab works on both synthesizing and detecting fake content, sometimes in the same paper.

Efros believes a healthier relationship with the truth can be found somewhere between the poles of real and fake. "The nature of science is a marketplace of ideas," he says. "You need to have multiple points of view, and you need to have them clash, and you need to have them challenged. In that sense, doing work on both sides of synthesis and detection is what propels the sides forward. It's a competitive collaboration, because in science it's really all about finding the truth."

To wit, consider three recent advances out of his lab:

- a method for detecting the use of a facial-manipulation tool in Adobe Photoshop and then reverting the image to its original state;
- a technique for transferring dance movements from one person to another, paired with a method for detecting whether such a transfer has been performed;
- an approach to learning individual styles of conversational hand gestures. It could be used both to detect deepfakes, in a manner similar to Agarwal's approach, and to synthesize them, by animating realistic hand gestures to accompany modified speech.

The Photoshop paper, authored by Efros, postdoctoral researcher Andrew Owens, undergraduate researcher Sheng-Yu Wang and two colleagues from Adobe, presents a novel way of detecting if a photograph has been modified by a photo editing tool called Face-Aware Liquify.

Using a simple but powerful interface, this tool lets users alter a subject's facial expression by changing their eyes, mouth and other features. Just last year, the Kremlin released an image of North Korean leader Kim Jong Un smiling and shaking hands with Russian Foreign Minister Sergey Lavrov, when in reality Kim was scowling. That's Face-Aware Liquify at work, Efros says. The team's innovation was to script the tool itself to automatically generate large numbers of manipulated faces. They then used those examples to train a machine learning classifier to detect and deconstruct fakes.

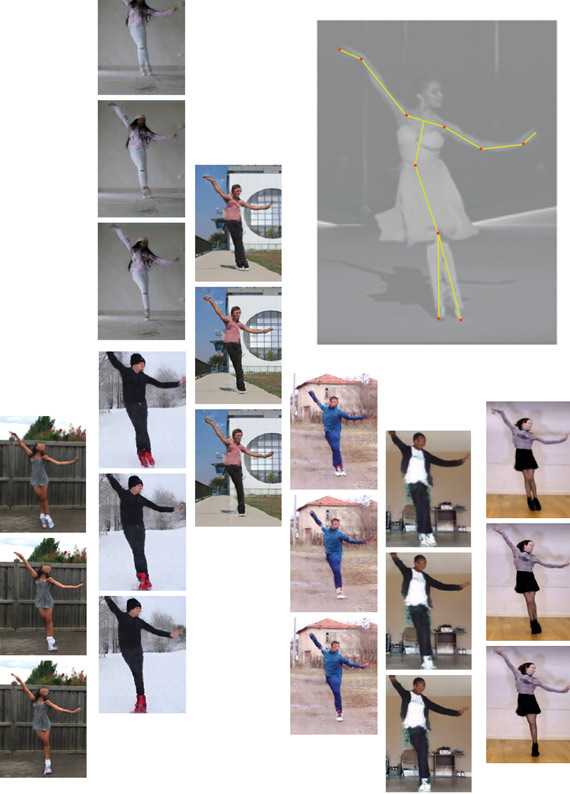

A motion-synthesis method digitally transfers dance moves from one individual to another, and a companion tool can test whether a video has used it. (Photos courtesy the researchers)

Caroline Chan, an undergraduate student at the time, was the lead author of the second paper, "Everybody Dance Now." It describes a method for transferring video motion from one person to another. Given a source video of a person dancing, the researchers showed they could digitally transfer the same moves to another individual, someone who wouldn't have been able to pull off the challenging ballet or hip-hop routines. This required just a few minutes of footage of the target subject performing some standard motions for the camera.

“Although our method is quite simple, it produces surprisingly compelling results,” the authors write — so compelling, they say, that they developed a companion forensics tool to test whether a given clip has been produced using their method.

The research on conversational gestures used machine learning and hours of video footage to learn how ten individuals' speech relates to their hand and arm movements. The model then generated fitting gestures to match new audio. The team, led by Ph.D. student Shiry Ginosar along with Owens, published their work, "Learning Individual Styles of Conversational Gesture," in June. Jitendra Malik, professor of electrical engineering and computer sciences, was the principal investigator on this paper.

The paper came paired with a large video dataset of person-specific gestures for talk-show hosts John Oliver, Seth Meyers, Ellen DeGeneres and others. Similarly, the dance paper came bundled with a first-of-its-kind open-source dataset of videos that can be used for training and motion transfer. Ginosar also contributed to the dance work. For her, sharing these datasets lets other researchers better understand motion transfer and identify videos where it's in use.

The counterfeiter vs. the investigator

The research coming out of Efros’ lab takes advantage of a relatively new concept in machine learning known as generative adversarial networks, or GANs. These systems use the interplay between synthesis and detection to produce more realistic synthesized images.

Think of it as pitting a counterfeiter against a police investigator, Efros says. A GAN includes both a generator, which tries to make counterfeits, and a discriminator, which, like a detective, tries to spot them. The two sides push each other to improve in an automated game of one-upmanship. That is a boon to those looking to synthesize realistic digital content, and a challenge for those trying to detect hidden fakes.
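The counterfeiter-and-detective analogy maps onto a two-player training loop. The sketch below is a deliberately tiny, hypothetical illustration, not code from Efros's lab:

- The "counterfeiter" (generator) can only shift standard normal noise by a learned offset `b`.
- The "detective" (discriminator) is a one-feature logistic regression, `D(x) = sigmoid(w*x + c)`.
- Evenly spaced normal quantiles stand in for random minibatches, so the run is deterministic.

All names and settings, including `train_toy_gan`, the learning rate and the step count, are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist

def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)

def train_toy_gan(real_mean=0.0, start_offset=3.0, steps=1000, lr=0.1, n=100):
    """Alternate detective and counterfeiter updates on a 1-D toy problem.

    Real data: N(real_mean, 1). Generator: x = z + b with z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(w*x + c). Returns the learned shift b.
    """
    # Evenly spaced normal quantiles replace random sampling (assumption for determinism).
    z = [NormalDist().inv_cdf((i + 0.5) / n) for i in range(n)]
    real = [real_mean + zi for zi in z]
    b, w, c = start_offset, 0.0, 0.0
    for _ in range(steps):
        fake = [zi + b for zi in z]
        # Detective step: gradient descent on -mean log D(real) - mean log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (d - 1.0) * x
            gc += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / n
        c -= lr * gc / n
        # Counterfeiter step: gradient descent on -mean log D(fake), the "non-saturating" loss.
        gb = sum(-(1.0 - sigmoid(w * (zi + b) + c)) * w for zi in z) / n
        b -= lr * gb
    return b

print(train_toy_gan())  # the fake samples' shift ends up near the real mean of 0.0
```

Each round, the detective takes one step toward better separating real from fake. The counterfeiter then takes one step toward fooling the updated detective. In this toy setting the two settle where the fake samples coincide with the real ones and the detective can do no better than a coin flip (D = 0.5 everywhere). A linear detective like this one cannot notice differences in spread, which is why the counterfeiter here is limited to shifting its samples. Real image generators replace both players with deep networks, where training is far less predictable.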

Theoretically, this technology could reach a state of equilibrium where generated content becomes virtually indistinguishable from real content. For now, the detectors are ahead. But if an equilibrium is reached, Efros says, we’ll need to adapt by modifying our expectations about digital images and videos, akin to how we came to scrutinize scam letters created by laser printers back in the 1990s.

“Things that people trusted in the past, they realize that they cannot trust anymore,” he says. “I would argue that their trust in photographs has always been misplaced. It was never the truth. It was always what the photographer wanted you to see. So in some sense this is good that people are getting more awareness of this now, because photography and even film were always subjective, in many different subtle and not-so-subtle ways.”

Both Farid and Efros agree that the greatest risk we face as a society is not the proliferation of foolproof deepfakes per se, but rather the argument that anything could be fake, without the weight of a trusted voice — such as a news organization or social media company — to counteract it.

“To me, that is the broader threat here,” Farid says. “As we become a more polarized society, as we enter a world where more and more people get their news on Facebook, which of course is manipulating what you see, we are all going to have our own set of facts. Something will come out damaging for a candidate, that candidate will tell you it’s fake, the people who are opposed to the candidate will say it happened, and where are we left as a democracy? If we can’t agree on basic facts of what happened and didn’t happen, we’re in trouble.”